Platform

Engineering Service

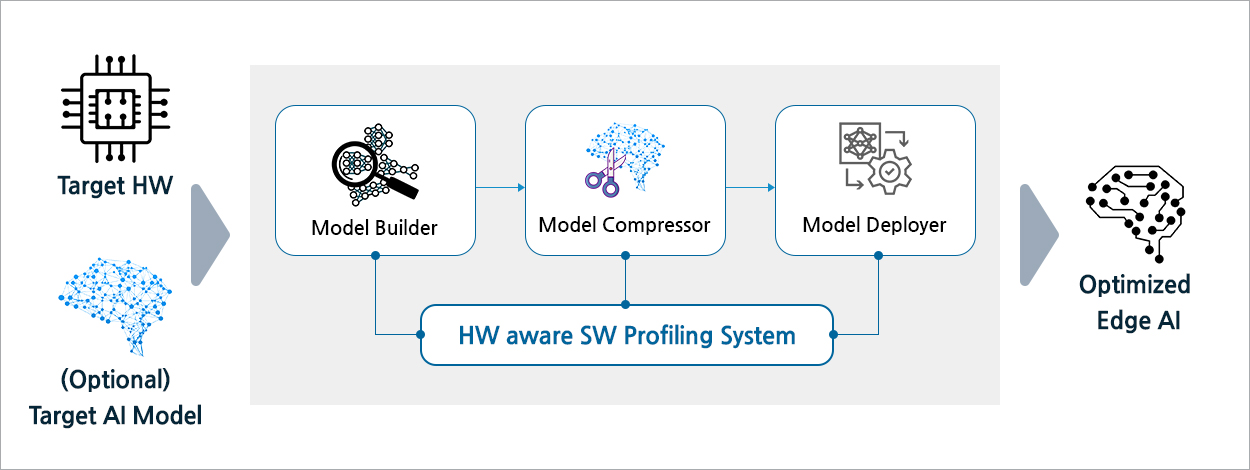

Opt-AI provides engineering services and software platforms for optimizing large AI models on resource-constrained edge devices.

These services include model building, model compression, and embedded system optimization technologies.

Model Building

- Hardware-friendly redesign of AI models for target edge devices
- Hardware and AI model recommendations, performance profiling, and automatic model tuning

Model Compression

- Reducing model size and improving inference speed while maintaining accuracy
- Quantization, pruning, and knowledge distillation

Embedded System Optimization

- Porting models to C++ and optimizing them for embedded systems
- Parallel processing techniques for inference scheduling and accelerated computing

On-device AI Software Platform

Opt-AI’s goal is to help customers secure AI inference performance, reduce development costs, and accelerate time to market for on-device AI. To do this, we provide ① model building, ② model compression, ③ embedded system optimization technologies, and ④ a HW-aware SW analysis system that automatically performs optimization across various HW environments.

Modules

- Model Builder + Model Compressor + Model Deployer
- HW-aware SW analysis system

Benefits

- Securing performance on specific HW + reducing costs + accelerating time to market
- Minimizing additional development costs when hardware changes

Core Technology

Through its HW-aware SW Profiling System, Opt-AI ensures effective performance on a wide range of hardware and processors.

By building development workflows tailored to the target hardware, we let customers focus solely on model development.

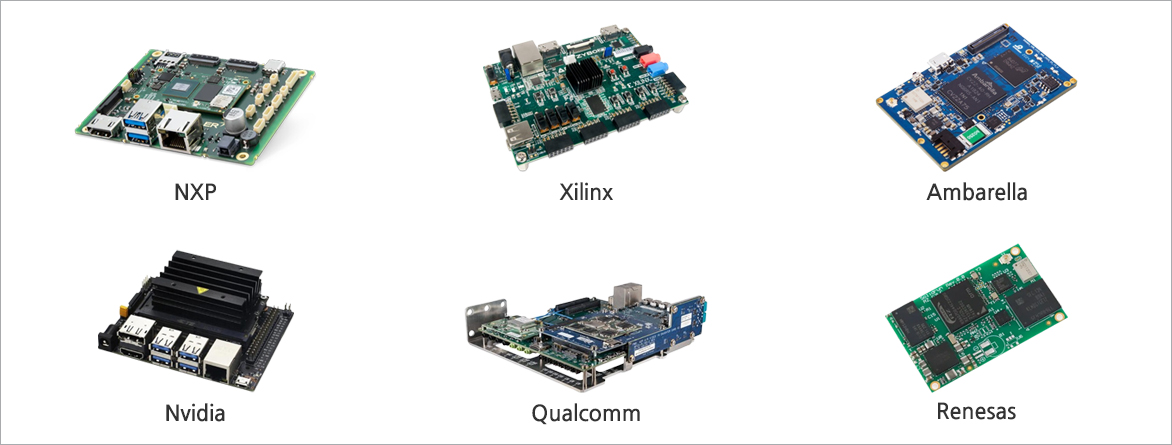

Supported Hardware

- Qualcomm, Ambarella, Renesas, NXP, NVIDIA, Xilinx, Rockchip, Coral

Supported Processors

- CPU, GPU, NPU, FPGA

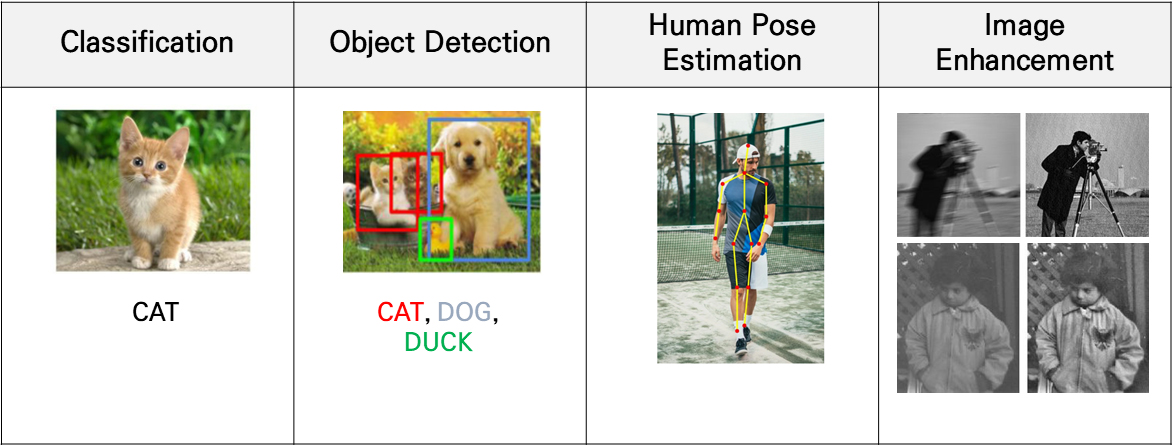

Supported Tasks

- Classification, Object Detection, Human Pose Estimation, Image Enhancement